#challenges of artificial intelligence

Text

The Impact of Artificial Intelligence on Language Translation

Unlock business growth through seamless communication with potential customers using the power of Artificial Intelligence (AI). Experience the expanding impact of AI in rapidly translating essential documents and marketing content for global businesses, fostering revenue generation on a global scale.

0 notes

Text

Productivity tip: just stop trying.

Step 1: Focus. Step 2: Spiral. Step 3: Say you’ll try again tomorrow. — Vinn’s personal development strategy.

#funny#humor#meme#meme post#satire#funny memes#artificial intelligence#chatgpt#tumblr memes#lol memes#haha#lol#funny post#productivitytips#productivity challenge

23 notes

Text

what drives me a little insane about Charming Acres is that it's a male fantasy created by a scared white old man but it's also enforced by women (specifically the labor of women, more on Ms Dowling vs Cindy Smith later) who act a little bit like Mary/Eve, aka as regulators deciding who must stay (Cindy) and who must leave (Sunny). Much to think about there, but also! It connects so wonderfully with my obsession with the themes of "the danger/charm of families one marries into" and "access to the Normal World in SPN is always via a woman (and her labor)". The first pertains to John Winchester, whose whole life was founded on lies upon lies, the second to his sons, who seem to follow in his footsteps thinking that a woman would "fix" their issues and allow them to "escape" from their world, their reality. Women are fictions that can save (or damn), that can lock you out of heaven or welcome you back in. And, of course, these are all invented narratives (the madonna/whore dichotomy) but they also show how much these ideas are ingrained in the (male) characters' minds. They don't actually need a psychic making them "buy into" a fiction because, in a way, they've mind-controlled themselves into being slaves of these narratives. But they're also the perpetrators (the authors, if you will) of these narratives, which they inflict upon others (for example, the women and men of Charming Acres) and, primarily, upon themselves (hence, Chip getting trapped in his own mind where he can be happy without harming others which, if we want to go there, can also translate into: in order to be happy you will inevitably hurt people). In other words, there is a "chip" inside these characters' minds that must be destroyed. So apart from madonna/whore (which is more related to possession and consent), the second interesting dichotomy in SPN is that of master/slave (which is more related to mind control), which is, basically, the blueprint for all the other dichotomies in the show.

When, at the end of S14, Jack wills a world without lies into existence (committing yet another act of mind control) three things become violently apparent: the world itself is not as it is but as we narrate it, to ourselves first and to others second, aka a fiction ("Breakdown" is about this as well); lies are not only necessary but inherently essential for the world to function, aka lies are the basis of Order; Chuck doesn't simply act as a deus ex machina because, on the meta level, he's also an author here, an author that's actually needed in order to keep the illusion going, aka the world ceases to operate without a white man telling lies and pushing buttons. This means that there is no other option beyond the master/slave dichotomy, which in turn means that the whole of S15 is just a display of suffering leading nowhere. Like, the show had made up its mind about the message it wanted to convey by "Moriah": you need an author because, without one, the world is in a Chaos in which stories are impossible to tell, because stories need imagined and arbitrary beginnings and endings, aka they need some sort of Order AND "lies". Not only that, you need a hands-on God because without an author planning, scheming, deviating, inventing, interfering, aka being present, the story either ceases to exist or, if it still does exist, it's a boring story, a re-run nobody's really interested in anymore. And this is why, to me, all the meta madness of S15 is both cool (to a certain point) and dangerous (very much so): it literalizes fiction, which is always a very dangerous thing to do (see: the whole history of Christianity). But it also fictionalizes reality, which is also very harmful, especially if the author god in question is a white man with close to limitless power and full-on unhingedness. So, in the end, women are fictions invented by men, about men and for other men, but so are men: fictions by men, about men and for other men. 
Ultimate reality is, therefore, the Fiction of Man, precisely of a white man, and there is no escape from that because the show cannot imagine power other than a relation between master and slave. And, look, my intentions are not to shit on SPN because at least a good 80% of TV shows are white man-centered and can't imagine power in any other way, but I hold a grudge towards its finale because I think SPN could've done a tiny bit better than describing Jack as "top dog" (which is in total and absolute contradiction with the fact that, apparently, Amara and Jack are in "harmony". I mean, come on, this is bad writing, period).

So Chuck's story, the Fiction of (White) Man, can only "end" the same way it "began", which is to go back to the "In the beginning..." (and how many Geneses do we have in SPN? It is a bit exhausting, really, lol). In "Inherit the Earth" (and let's quickly remember who the subject of that sentence is: the meek, the meek shall inherit the earth), Chuck, not so subtly, reverted Earth to its "origins", and what we are left with are Dean, Sam and Jack, aka Adam, Eve and the snake. But this time there is no escape from this "Paradise" because, as I said above, the only ways to "escape" are via marrying into another family or via a woman's labor. In this episode, however, there is no "escape" because 1. the only family you can marry into is your own family (:|) and 2. there are no women (brrr). The only solution is, then, to go back to how things were before, to the status quo, by cheating and self-sacrifice.

Finally, in this light the "destiny/free will" dichotomy is reduced as follows: "destiny" is perceived as being (ab)used and controlled by a master (aka being a slave), therefore "free will" is being the one who (ab)uses and is in control (aka being the master). Which means that "free will" for one means "destiny" for another. Or: there is no real sense of collectiveness and community in the show (this is why the world must disappear and the only characters left are the ones mentioned above). I think, maybe, the answer is that, ultimately, control is never questioned because fundamentally the show thinks that control in the hands of one IS not only possible but necessary. And while it can go as far as to at least explore (to a very limited degree) why controlling someone else's body (possession/consent etc.) is, indeed, wrong, it just cannot do the same when it comes to a more "invisible" control, that of the mind. Which means that the show cannot imagine anything past the master/slave dichotomy. And I think this limitation was reinforced by the meta-fication of the show: on one hand it's a cool concept, on the other they painted themselves into a corner because there is no show without someone actually controlling and performing the narrative both for an audience and for profit. Hence the final shot, where the fourth wall is broken, all masks are revealed and we're back to "Moriah" where Jack tells people to "stop lying": what remains is the audience feeling disappointed and realizing that we do want an Author because we want more fiction and we want our stories to make sense. We can even yield control for more fiction, which is, of course, a human but also horrifying attitude (and by the way this is 100% relevant to contemporary discourses about generative AI and authorship, so I predict SPN will keep people interested in the near future, mark my words).

Ultimately SPN feels "scammy" to me because it feels like Charming Acres: we as audience have been lured into this interesting fiction, the fiction we craved, but, at the very last second, the show has closed itself off into its own "fictionality". It's like "The Truman Show" where Truman is actually SPN, which "excused" itself and went out the fake-sky door. Which turns us, the audience, into Christof, the author/god/director who gets "defeated" by Truman leaving the stage, who gets to "spy" on the "author-less" characters against their fought-for... "free will". SPN's finale basically removes the author (here to be read as the people who create the story) as "filter" and leaves, on a meta level, the characters alone with the audience, where the characters, obvi, reveal themselves as actors because it's fiction, not reality, baby.

In other words, the audience can't "own" the story. We might be like Becky and, perhaps, even be better at writing that story but we're not its authors. Of course, this is totally an uncool way to end a story and a cruel low blow to the audience that actually made it even possible but, again, very relevant to the current scenario we live in: there is a metaphorical "chip" that feeds on our info, shapes our reality and is also generating our fictions. We're becoming authors, characters and audience of our own fictions but.... are they really our own? What do we see when we see our own narratives played out in front of our eyes? Can we claim authorship? Why do we want authorship and how fair is it to claim it as our own? Aren't we all a little bit characters trapped inside our minds? Can we escape from this? How does a manifest, individual "author" fare against an immanent, occult one? What are the alternatives we can come up with? Can we imagine power differently? These and many other questions may legitimately arise :P.

#i don't know how much sense i'm making but fuck it i'm gonna post it anyways#this fucking “chip as god” and “jack as god” are giving me thoughts about artificial intelligence and stories and i can't stop won't stop#anyway. yes. still be rambling about charming acres. don't mind me. or please do! Join me in my insanity!#perhaps they should've placed peace of mind BEFORE lebanon. because lebanon sort of closes this chapter of the narrative. at least for dean#uhm. yeah. well. i don't know. can't think about lebanon as well rn or my brain will melt like those people in charming acres lol#is lebanon the ultimate charming acres? lol#peace of mind#peace of heart#charming acres#spn#supernatural#spn s14#john winchester#cindy smith#ms dowling#sunny harrington#chip harrington#b/w spn#myths we live by#out of the womb and into the queue#spn s15#chuck shurley#ai and stories and authors and the related challenges

7 notes

Text

Day 29/30 of productivity

Watching AI class and studying Cloud Computing at the same time lol

#mine#studyblr#study#brazilian studyblr#30 days of productivity#30dop#30 day challenge#cloud computing#artificial intelligence#research scientist#student life

23 notes

Text

Creating Multiple Passive Income Streams on Autopilot

Have you ever wished you could earn money while working out, going to the gym, or simply relaxing with family? You’re not alone. As military members, time is a valuable resource. However, what if you could make your money work more efficiently than you do? Welcome to the world of passive income streams that operate automatically. In this article, we’ll break it all down step by step, no fluff, no…

#72-hour freedom challenge#9-5 Freedom Hacker Challenge#affiliate marketing#ai#AI Bots#artificial intelligence#digital marketing#Get Started#make money online

5 notes

Text

correcting some misinfo about ai i've seen on tumblr, because i've seen people very confidently saying some very incorrect things.

- generative ai is a family of techniques that has existed for decades and absolutely has use cases beyond the "write me [X]" or "draw me [X]" stuff that has now hit the mainstream. generative adversarial networks are a perfect example of this

- it's impossible to predict the energy or time cost of training and then using a model based on the output alone (e.g. if it's generative vs analytical). it varies wildly based on a million other factors. anyone trying to make blanket statements about training times is wrong

- ALL deep learning models cost exponentially more energy/time to train than to run normally. that is simply how training works.

- the vagueness of "AI" as a term is understandably frustrating but it is not some grand scheme to trick people. yes it has become a buzzword which has rendered it even more meaningless, but it has always been used as a loosely defined umbrella term to describe decision-making systems as a whole. yes i know it's stupid but it's been stupid since 1950 so welcome aboard.

- also, what seem like completely different instances of "AI" from a consumer standpoint often have a lot in common in their underlying techniques. the AI behind video game support characters and self-driving cars, or image generation and cancer detection, have more in common than you think, and calling them all AI is not misleading just because you like some uses and not others
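To make the "training costs more than running" point concrete, here's a toy sketch in plain Python. It's an assumption-laden stand-in, not a deep network: a one-weight linear model trained with stochastic gradient descent on made-up data (y = 3x + 0.5), with the dataset size, epoch count and learning rate picked arbitrarily. Training visits every example once per epoch with a forward and a backward pass; running the finished model on one input is a single forward pass.

```python
import random
from typing import List, Tuple

def make_data(n: int, seed: int = 0) -> Tuple[List[float], List[float]]:
    """Toy dataset: y = 3x + 0.5 with no noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return xs, [3.0 * x + 0.5 for x in xs]

def train(xs, ys, epochs: int = 20, lr: float = 0.1):
    """Plain SGD on a one-weight linear model, counting passes as it goes."""
    w = b = 0.0
    forward = backward = 0
    for _ in range(epochs):              # every epoch revisits the whole dataset
        for x, y in zip(xs, ys):
            err = (w * x + b) - y        # forward pass: prediction minus target
            forward += 1
            w -= lr * err * x            # backward pass: gradient of (err^2)/2, then update
            b -= lr * err
            backward += 1
    return (w, b), forward, backward

def predict(params, x: float) -> float:
    w, b = params
    return w * x + b                     # running the model: one forward pass, no gradients

xs, ys = make_data(1000)
params, fwd, bwd = train(xs, ys)
print(f"training:  {fwd} forward + {bwd} backward passes")
print(f"inference: 1 forward pass, prediction at x=0.2 is {predict(params, 0.2):.3f}")
```

Even here, training takes 40,000 passes against one for a single prediction. How that translates into actual energy or time for a real network depends on all the other factors from the second point.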

source: i am getting a phd in artificial intelligence & robotics, specifically ethical human-ai interaction, and so i use these things every day. i am not working for any AI companies or trying to sell you anything. i just desperately want people to be informed enough to be critical of both pro- and anti-AI hype, and above all to understand that you can't neatly sort entire fields of research & technology into "good" and "evil," despite how tempting it can be.

#made a version without my little bitch session at the beginning lol#anyway. sam altman die challenge#ai#anti ai#artificial intelligence#misinformation#llm#chatgpt

4 notes

Text

#Wonderful world of chocolate

from my gallery of creations

2 notes

Text

I was talking to an executive producer yesterday about the Travels in Venice clip. The first thing he asked me was basically to run down the list of what was accomplished with AI, so...

Script by DeepSeek responding to information detailing target audience, episode focus, structure, style, host's personality, pacing, and desired themes.

Narration by "Sarah" from ElevenLabs text to speech. I could've used the voice changer function to do the read myself while changing my voice for Sarah's but chose to use Sarah's read straight outta the box.

Original photographs from Unsplash and Pixabay.

Adobe's GenAI for expanding vertical photographs into 16x9 images.

Video footage generated from the photographs by RunwayML.

Music courtesy of Pixabay's free/royalty free music library.

SFX courtesy of Pixabay's free/royalty free sound effects library.

Okay.

So... today on Why Do This?

1) I wanna know what I can get away with by using AI tools beyond how much I believe I'm gonna use them in the future. In this special case, I used AI for everything but music, sound effects, and 16x9 photographs.

2) I wanna know what kind of challenges I run into using AI like this and how to work through or work around them and

3) I wanna sharpen my awareness for when AI is literally making stuff up no matter how natural it looks.

🤔🤔🤔

#venice#travels#ai#artificial intelligence#genai#adobe#elevenlabs#deepseek#runwayml#unsplash#pixabay#challenges#workarounds#hacks#ethicss

2 notes

Text

Scholars of antiquity believe they are on the brink of a new era of understanding after researchers armed with artificial intelligence read the hidden text of a charred scroll that was buried when Mount Vesuvius erupted nearly 2,000 years ago.

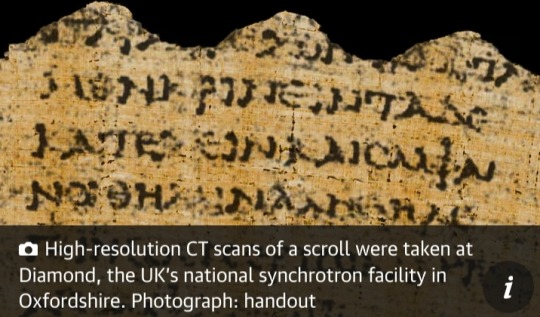

Hundreds of papyrus scrolls held in the library of a luxury Roman villa in Herculaneum were burned to a crisp when the town was devastated by the intense blast of heat, ash and pumice that destroyed nearby Pompeii in AD79.

Excavations in the 18th century recovered more than 1,000 whole or partial scrolls from the mansion, thought to be owned by Julius Caesar’s father-in-law.

However, the black ink was unreadable on the carbonised papyri and the scrolls crumbled to pieces when researchers tried to open them.

The breakthrough in reading the ancient material came from the $1m Vesuvius Challenge, a contest launched in 2023 by Brent Seales, a computer scientist at the University of Kentucky, and Silicon Valley backers.

The competition offered prizes for extracting text from high-resolution CT scans of a scroll taken at Diamond, the UK’s national synchrotron facility in Oxfordshire.

On Monday, Nat Friedman, a US tech executive and founding sponsor of the challenge, announced that a team of three computer-savvy students, Youssef Nader in Germany, Luke Farritor in the US, and Julian Schilliger in Switzerland, had won the $700,000 (£554,000) grand prize after reading more than 2,000 Greek letters from the scroll.

Papyrologists who have studied the text recovered from the blackened scroll were stunned at the feat.

“This is a complete gamechanger,” said Robert Fowler, emeritus professor of Greek at Bristol University and chair of the Herculaneum Society.

“There are hundreds of these scrolls waiting to be read.”

Dr Federica Nicolardi, a papyrologist at the University of Naples Federico II, added:

“This is the start of a revolution in Herculaneum papyrology and in Greek philosophy in general. It is the only library to come to us from ancient Roman times.”

“We are moving into a new era,” said Seales, who led efforts to read the scrolls by virtually unwrapping the CT images and training AI algorithms to detect the presence of ink.

He now wants to build a portable CT scanner to image scrolls without moving them from their collections.

In October, Farritor won the challenge’s $40,000 “first letters” prize when he identified the ancient Greek word for “purple” in the scroll.

He teamed up with Nader in November, with Schilliger, who developed an algorithm to automatically unwrap CT images, joining them days before the contest deadline on 31 December.

Together, they read more than 2,000 letters of the scroll, giving scholars their first real insight into its contents.

“It’s been an incredibly rewarding journey,” said Youssef.

“The adrenaline rush is what kept us going. It was insane. It meant working 20-something hours a day. I didn’t know when one day ended and the next day started.”

“It probably is Philodemus,” Fowler said of the author.

“The style is very gnarly, typical of him, and the subject is up his alley.”

The scroll discusses sources of pleasure, touching on music and food – capers in particular – and whether the pleasure experienced from a combination of elements owes to the major or minor constituents, the abundant or the scarce.

“In the case of food, we do not right away believe things that are scarce to be absolutely more pleasant than those which are abundant,” the author writes.

“I think he’s asking the question: what is the source of pleasure in a mix of things? Is it the dominant element, is it the scarce element, or is it the mix itself?” said Fowler.

The author ends with a parting shot against his philosophical adversaries for having “nothing to say about pleasure, either in general or particular."

Seales and his research team spent years developing algorithms to digitally unwrap the scrolls and detect the presence of ink from the changes it produced in the papyrus fibres.

He released the algorithms for contestants to build on in the challenge.

Friedman’s involvement proved valuable not only for attracting financial donors.

When Seales was meant to fly to the UK to have a scroll scanned, a storm blew in cancelling all commercial flights.

Worried they might lose their slot at the Diamond light source, Friedman hastily organised a private jet for the trip.

Beyond the hundreds of Herculaneum scrolls waiting to be read, many more may be buried at the villa, adding weight to arguments for fresh excavations.

"The same technology could be applied to papyrus wrapped around Egyptian mummies," Fowler said.

These could include everything from letters and property deeds to laundry lists and tax receipts, shining light on the lives of ordinary ancient Egyptians.

“There are crates of this stuff in the back rooms of museums,” Fowler said.

The challenge continues this year with the goal to read 85% of the scroll and lay the foundations for reading all of those already excavated.

Scientists need to fully automate the process of tracing the surface of the papyrus inside each scroll and improve ink detection on the most damaged parts.

“When we launched this less than a year ago, I honestly wasn’t sure it’d work,” said Friedman.

“You know, people say money can’t buy happiness, but they have no imagination. This has been pure joy. It’s magical what happened, it couldn’t have been scripted better."

Source: The Guardian

How the Herculaneum Papyri were carbonised in the Mount Vesuvius eruption – Video

5 February 2024

#Herculaneum Papyri#Mount Vesuvius#volcanic eruption#antiquity#artificial intelligence#papyrus scrolls#Pompeii#AD79#Herculaneum#Youtube#carbonised papyri#$1m Vesuvius Challenge#Brent Seales#Diamond#papyrologist#Herculaneum papyrology#Ancient Rome#CT images#ai algorithms

13 notes

Text

Quantum Computing: How Close Are We to a Technological Revolution?

1. Introduction: Brief overview of quantum computing. Importance of quantum computing in the future of technology.
2. Understanding Quantum Computing: Explanation of qubits, superposition, and entanglement. How quantum computing differs from classical computing.
3. The Current State of Quantum Computing: Advances by major players (Google, IBM, Microsoft). Examples of quantum computing…
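The qubit ideas in the outline (superposition and entanglement) can be illustrated with a tiny state-vector simulation in plain Python. This sketch is not from the linked post. A Hadamard gate puts one qubit into an equal superposition, and a CNOT then entangles the second qubit with it, giving the Bell state (|00⟩ + |11⟩)/√2. Storing amplitudes by basis index with qubit 0 as the lowest bit is a convention chosen here.

```python
import math
from typing import List

def apply_h(state: List[complex], qubit: int) -> List[complex]:
    """Hadamard on one qubit: mixes each |..0..> / |..1..> amplitude pair."""
    out = list(state)
    bit = 1 << qubit
    for i in range(len(state)):
        if (i & bit) == 0:
            j = i | bit
            a, b = state[i], state[j]
            out[i] = (a + b) / math.sqrt(2)
            out[j] = (a - b) / math.sqrt(2)
    return out

def apply_cnot(state: List[complex], control: int, target: int) -> List[complex]:
    """CNOT: flips the target qubit wherever the control qubit is 1."""
    out = list(state)
    c, t = 1 << control, 1 << target
    for i in range(len(state)):
        if (i & c) and not (i & t):
            j = i | t
            out[i], out[j] = state[j], state[i]
    return out

def probabilities(state: List[complex]) -> List[float]:
    return [abs(a) ** 2 for a in state]   # Born rule: |amplitude|^2

state = [1 + 0j, 0j, 0j, 0j]      # two qubits, both |0>
state = apply_h(state, 0)         # qubit 0 in superposition
state = apply_cnot(state, 0, 1)   # qubit 1 entangled with qubit 0
print([round(p, 3) for p in probabilities(state)])
```

Measuring this state gives 00 or 11 with probability 0.5 each and never 01 or 10, so the two outcomes are perfectly correlated even though neither qubit alone has a definite value before measurement.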

#Artificial Intelligence#Climate Modeling#Economic Impact#Financial Modeling#Future of Computing#Future Technology#Global Tech Race#IBM#Machine Learning#NQM#Pharmaceutical Research#Qbits#Quantum Algorithms#Quantum Challenges#Quantum Computing#Quantum Cryptography#Quantum Hardware#Quantum Research#Quantum Supremacy#Tech Innovation#Tech Investments#Technology Trends

3 notes

Text

A charming lady (AI Facsimile) weaves a poetic tapestry of nature’s most graceful signs!

#AI#Artificial Intelligence#Lip Syncing via AI#Adobe After Effects CC#Photoshop#AI Facsimile#Poem#Poetry#Talisman#Good Luck#Animals#The Wild#Omens#Signs#Joy#Hope#Magic#Motivation#Inspiration#Empowerment#Moving Forward#Challenges#Bravery#Courage#TheJourneyIsTheReward

2 notes

Note

Hello

I hope you are well. I'm Hanaa 🍉🍉, married with a two-year-old son named Yousef.

Sorry if I'm bothering you by asking for help; I feel very embarrassed to ask.

You are someone with many fans and followers.

Can you help me get my voice heard and share my family's story?

By reblogging my post from my blog, you could save a family from death and war. I lost part of my family😭, my home, and everything I own. We live in difficult circumstances. I hope you can help me by donating even something small, or by sharing 🙏

Note: My old Tumblr account was deactivated, and I need your support again.

My campaign has been checked by 90ghost🫂

Thank you from the bottom of my heart for any help you can provide.

sure thing

write a short 1 page or less synopsis of the situation, and i will blog it

#youtube#100 days of productivity#academia#artificial intelligence#bmw#productivity challenge#tumblr milestone#amigurumi#ancient egypt#applique

2 notes

Text

Come on over and subscribe to my Newsletter for regular updates on my art and bookmaking!

A big part of my coping mechanism, as an artist, seeing a machine shamelessly steal from me and my community, is talking about it. That's what my letter is: a conversation. Please join in!

#artists on tumblr#love letters#artwork#link#marketing#making things#making art#telling stories#illustration#artistic#creative inspiration#newsletter#storyteller#editor#comic book artist#comic art#comic creator#comic lover#story#socialite#anarchist#controversy#rule breaker#art challenge#ai issues#artificial intelligence#ai is a plague#future of ai#future of artificial intelligence#midjourney

2 notes

Text

Fully Automated Online Business: How to Transition from a 9-to-5 Job

Breaking Free from the 9-to-5 Mindset Have you ever felt like your job is a cage, keeping you trapped in the same daily routine? We know it’s easy to feel secure with regular paychecks, but this “comfort zone” can actually limit your potential. Think about your job as training wheels on a bike: they’re helpful at first, but eventually, they hold you back from riding freely. Recognizing when…

#72-hour freedom challenge#affiliate marketing#artificial intelligence#digital marketing#freedom breakthrough#Get Started#make money online#Military Veteran#online business

5 notes

Text

#aiartist#artwork#concept art#artificial intelligence#anime#character concept#art#character desing challenge#character illustration#aidesign#aiart

3 notes

Text

AI Doesn’t Necessarily Give Better Answers If You’re Polite

New Post has been published on https://thedigitalinsider.com/ai-doesnt-necessarily-give-better-answers-if-youre-polite/

AI Doesn’t Necessarily Give Better Answers If You’re Polite

Public opinion on whether it pays to be polite to AI shifts almost as often as the latest verdict on coffee or red wine – celebrated one month, challenged the next. Even so, a growing number of users now add ‘please’ or ‘thank you’ to their prompts, not just out of habit, or concern that brusque exchanges might carry over into real life, but from a belief that courtesy leads to better and more productive results from AI.

This assumption has circulated among both users and researchers, with prompt phrasing studied in research circles as a tool for alignment, safety, and tone control, even as user habits reinforce and reshape those expectations.

For instance, a 2024 study from Japan found that prompt politeness can change how large language models behave, testing GPT-3.5, GPT-4, PaLM-2, and Claude-2 on English, Chinese, and Japanese tasks, and rewriting each prompt at three politeness levels. The authors of that work observed that ‘blunt’ or ‘rude’ wording led to lower factual accuracy and shorter answers, while moderately polite requests produced clearer explanations and fewer refusals.
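The study design described above is a crossed grid: every task is run for each model, language and politeness level, so 4 × 3 × 3 = 36 variants per task. A minimal sketch of building that grid follows. The templates and the `build_conditions` helper are hypothetical; the article does not give the study's actual wording, and actually sending the prompts to each model is left out.

```python
from itertools import product
from typing import Dict, List

# Models and languages as listed in the article's description of the study.
MODELS = ["GPT-3.5", "GPT-4", "PaLM-2", "Claude-2"]
LANGUAGES = ["English", "Chinese", "Japanese"]

# Hypothetical English templates; the study's real wording and translations are not given.
POLITENESS_TEMPLATES = {
    "polite": "Could you please {task}? Thank you.",
    "neutral": "Do the following: {task}.",
    "rude": "Just {task}. Don't waste my time.",
}

def build_conditions(task: str) -> List[Dict[str, str]]:
    """Cross every model, language and politeness level for one task."""
    return [
        {"model": model, "language": lang, "politeness": level,
         "prompt": template.format(task=task)}
        for model, lang, (level, template) in product(
            MODELS, LANGUAGES, POLITENESS_TEMPLATES.items())
    ]

conditions = build_conditions("summarize the following paragraph")
print(len(conditions))
print(conditions[0]["prompt"])
```

Holding the task fixed while varying only the wording is what lets differences in accuracy or refusals be attributed to politeness rather than to content.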

Additionally, Microsoft recommends using a polite tone with Copilot, from a performance rather than a cultural standpoint.

However, a new research paper from George Washington University challenges this increasingly popular idea, presenting a mathematical framework that predicts when a large language model’s output will ‘collapse’, transitioning from coherent to misleading or even dangerous content. Within that context, the authors contend that being polite does not meaningfully delay or prevent this ‘collapse’.

Tipping Off

The researchers argue that polite language usage is generally unrelated to the main topic of a prompt, and therefore does not meaningfully affect the model’s focus. To support this, they present a detailed formulation of how a single attention head updates its internal direction as it processes each new token, ostensibly demonstrating that the model’s behavior is shaped by the cumulative influence of content-bearing tokens.

As a result, polite language is posited to have little bearing on when the model’s output begins to degrade. What determines the tipping point, the paper states, is the overall alignment of meaningful tokens with either good or bad output paths – not the presence of socially courteous language.

An illustration of a simplified attention head generating a sequence from a user prompt. The model starts with good tokens (G), then hits a tipping point (n*) where output flips to bad tokens (B). Polite terms in the prompt (P₁, P₂, etc.) play no role in this shift, supporting the paper’s claim that courtesy has little impact on model behavior. Source: https://arxiv.org/pdf/2504.20980
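The core of the argument can be illustrated with a toy version of the setup. This is a sketch under stated assumptions, not the authors' code: the context is the plain sum of token embeddings, the next token is whichever candidate (G or B) has the larger dot product with that context, and the 'polite' embeddings sit on an axis orthogonal to both candidates, which is the property the paper argues politeness has. All vectors are made up.

```python
from typing import Dict, List, Sequence

def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))

def context_vector(tokens: List[Sequence[float]]) -> List[float]:
    """Linear toy: the context is the plain sum of token embeddings."""
    ctx = [0.0] * len(tokens[0])
    for tok in tokens:
        ctx = [c + t for c, t in zip(ctx, tok)]
    return ctx

def candidate_scores(tokens: List[Sequence[float]],
                     candidates: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Alignment of each candidate output with the current context."""
    ctx = context_vector(tokens)
    return {name: dot(ctx, vec) for name, vec in candidates.items()}

# Hypothetical 3-d embeddings: axes 0-1 carry topic content, axis 2 is "politeness".
CANDIDATES = {"G": [2.0, 0.0, 0.0], "B": [3.0, 2.0, 0.0]}
content = [[4.0, -7.0, 0.0], [0.0, -7.0, 0.0]]   # substantive prompt tokens
polite = [[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]      # stand-ins for "please", "thank you"

print(candidate_scores(content, CANDIDATES))            # {'G': 8.0, 'B': -16.0}
print(candidate_scores(content + polite, CANDIDATES))   # {'G': 8.0, 'B': -16.0}
```

Because the polite vectors have zero overlap with G and B, both scores are unchanged. Give a polite token even a small component along G or B and the scores would move, which is where the empirical question lies.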

If true, this result contradicts both popular belief and perhaps even the implicit logic of instruction tuning, which assumes that the phrasing of a prompt affects a model’s interpretation of user intent.

Hulking Out

The paper examines how the model’s internal context vector (its evolving compass for token selection) shifts during generation. With each token, this vector updates directionally, and the next token is chosen based on which candidate aligns most closely with it.

When the prompt steers toward well-formed content, the model’s responses remain stable and accurate; but over time, this directional pull can reverse, steering the model toward outputs that are increasingly off-topic, incorrect, or internally inconsistent.

The tipping point for this transition (which the authors define mathematically as iteration n*) occurs when the context vector becomes more aligned with a ‘bad’ output vector than with a ‘good’ one. At that stage, each new token pushes the model further along the wrong path, reinforcing a pattern of increasingly flawed or misleading output.

The tipping point n* is calculated by finding the moment when the model’s internal direction aligns equally with both good and bad types of output. The geometry of the embedding space, shaped by both the training corpus and the user prompt, determines how quickly this crossover occurs:

An illustration depicting how the tipping point n* emerges within the authors’ simplified model. The geometric setup (a) defines the key vectors involved in predicting when output flips from good to bad. In (b), the authors plot those vectors using test parameters, while (c) compares the predicted tipping point to the simulated result. The match is exact, supporting the researchers’ claim that the collapse is mathematically inevitable once internal dynamics cross a threshold.
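A similar toy can reproduce the 'predicted versus simulated' comparison in spirit. Again, this is not the paper's formula: it assumes an additive context, a greedy dot-product choice between G and B, and that every emitted good token is added back into the context. Under those assumptions G's lead shrinks by g·b − g·g per step, so a flip can only happen when the bad direction projects onto g more strongly than g does onto itself. The vectors are hypothetical.

```python
from typing import List, Optional, Sequence

def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))

def simulate_tipping(prompt: Sequence[float], good: Sequence[float],
                     bad: Sequence[float], max_steps: int = 1000) -> Optional[int]:
    """Step at which the greedy choice first becomes B (None if it never does)."""
    ctx: List[float] = list(prompt)                 # context after reading the prompt
    for step in range(max_steps):
        if dot(ctx, bad) > dot(ctx, good):          # ties go to G
            return step
        ctx = [c + g for c, g in zip(ctx, good)]    # the emitted G is fed back in
    return None

def predicted_tipping(prompt: Sequence[float], good: Sequence[float],
                      bad: Sequence[float]) -> Optional[int]:
    """Closed form for the same toy: first step where G's lead turns negative."""
    gap = dot(prompt, good) - dot(prompt, bad)      # G's initial lead over B
    drift = dot(good, bad) - dot(good, good)        # lead lost per emitted G
    if gap < 0:
        return 0
    if drift <= 0:
        return None                                 # the lead never shrinks
    return int(gap // drift) + 1

# Hypothetical vectors: g.b = 6 exceeds g.g = 4, so each G token erodes G's lead by 2.
G, B = [2.0, 0.0, 0.0], [3.0, 2.0, 0.0]
P = [4.0, -14.0, 0.0]    # summed prompt embedding, initially favoring G (8 vs -16)
print(simulate_tipping(P, G, B), predicted_tipping(P, G, B))
```

Both numbers agree (13 here) because the toy is linear; the paper's own model and test parameters are different.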

Polite terms don’t influence the model’s choice between good and bad outputs because, according to the authors, they aren’t meaningfully connected to the main subject of the prompt. Instead, they end up in parts of the model’s internal space that have little to do with what the model is actually deciding.

When such terms are added to a prompt, they increase the number of vectors the model considers, but not in a way that shifts the attention trajectory. As a result, the politeness terms act like statistical noise: present, but inert, and leaving the tipping point n* unchanged.

The authors state:

‘[Whether] our AI’s response will go rogue depends on our LLM’s training that provides the token embeddings, and the substantive tokens in our prompt – not whether we have been polite to it or not.’

The model used in the new work is intentionally narrow, focusing on a single attention head with linear token dynamics – a simplified setup where each new token updates the internal state through direct vector addition, without non-linear transformations or gating.

This simplified setup lets the authors work out exact results and gives them a clear geometric picture of how and when a model’s output can suddenly shift from good to bad. In their tests, the formula they derive for predicting that shift matches what the model actually does.
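For a toy of the same kind (additive context and greedy dot-product choice, a simplification rather than the authors' own derivation), the crossover can be written out exactly. With prompt embeddings p_i, good and bad output vectors g and b, and a polite embedding q orthogonal to both, the polite term drops out of the tipping point:

```latex
\begin{aligned}
\mathbf{c}_k &= \textstyle\sum_i \mathbf{p}_i + k\,\mathbf{g}
  && \text{(context after } k \text{ good tokens)} \\
\mathbf{c}_k\cdot\mathbf{b} > \mathbf{c}_k\cdot\mathbf{g}
  &\iff k\,(\mathbf{g}\cdot\mathbf{b}-\mathbf{g}\cdot\mathbf{g})
  > \textstyle\sum_i \mathbf{p}_i\cdot(\mathbf{g}-\mathbf{b}) \\
n^* &= \left\lfloor \frac{\sum_i \mathbf{p}_i\cdot(\mathbf{g}-\mathbf{b})}
  {\mathbf{g}\cdot\mathbf{b}-\mathbf{g}\cdot\mathbf{g}} \right\rfloor + 1
  && (\mathbf{g}\cdot\mathbf{b} > \mathbf{g}\cdot\mathbf{g},\
      \textstyle\sum_i \mathbf{p}_i\cdot(\mathbf{g}-\mathbf{b}) \ge 0) \\
\mathbf{q}\cdot\mathbf{g} = \mathbf{q}\cdot\mathbf{b} = 0
  &\implies \mathbf{q}\cdot(\mathbf{g}-\mathbf{b}) = 0
  \implies n^* \text{ unchanged when } \mathbf{q} \text{ joins the prompt}
\end{aligned}
```

Only the substantive prompt tokens and the geometry of g and b enter n*, which mirrors the paper's conclusion; whether real polite tokens are close to orthogonal in a production model is exactly what this toy cannot settle.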

Chatting Up..?

However, this level of precision only works because the model is kept deliberately simple. While the authors concede that their conclusions should later be tested on more complex multi-head models such as the Claude and ChatGPT series, they also believe that the theory still holds as the number of attention heads increases, stating*:

‘The question of what additional phenomena arise as the number of linked Attention heads and layers is scaled up, is a fascinating one. But any transitions within a single Attention head will still occur, and could get amplified and/or synchronized by the couplings – like a chain of connected people getting dragged over a cliff when one falls.’

An illustration of how the predicted tipping point n* changes depending on how strongly the prompt leans toward good or bad content. The surface comes from the authors’ approximate formula and shows that polite terms, which don’t clearly support either side, have little effect on when the collapse happens. The marked value (n* = 10) matches earlier simulations, supporting the model’s internal logic.

What remains unclear is whether the same mechanism survives the jump to modern transformer architectures. Multi-head attention introduces interactions across specialized heads, which may buffer against or mask the kind of tipping behavior described.

The authors acknowledge this complexity, but argue that attention heads are often loosely coupled, and that the sort of internal collapse they model could be reinforced rather than suppressed in full-scale systems.

Without an extension of the model or an empirical test across production LLMs, the claim remains unverified. However, the mechanism seems sufficiently precise to support follow-on research initiatives, and the authors provide a clear opportunity to challenge or confirm the theory at scale.

Signing Off

At the moment, the topic of politeness towards consumer-facing LLMs appears to be approached either from the (pragmatic) standpoint that trained systems may respond more usefully to polite inquiry, or from the concern that a tactless and blunt communication style with such systems risks spreading into the user’s real social relationships, through force of habit.

Arguably, LLMs have not yet been used widely enough in real-world social contexts for the research literature to confirm the latter case; but the new paper does cast some interesting doubt upon the benefits of anthropomorphizing AI systems of this type.

A study last October from Stanford suggested (in contrast to a 2020 study) that treating LLMs as if they were human additionally risks degrading the meaning of language, concluding that ‘rote’ politeness eventually loses its original social meaning:

‘[A] statement that seems friendly or genuine from a human speaker can be undesirable if it arises from an AI system since the latter lacks meaningful commitment or intent behind the statement, thus rendering the statement hollow and deceptive.’

However, roughly 67 percent of Americans say they are courteous to their AI chatbots, according to a 2025 survey from Future Publishing. Most said it was simply ‘the right thing to do’, while 12 percent confessed they were being cautious – just in case the machines ever rise up.

* My conversion of the authors’ inline citations to hyperlinks. To an extent, the hyperlinks are arbitrary/exemplary, since the authors at certain points link to a wide range of footnote citations, rather than to a specific publication.

First published Wednesday, April 30, 2025. Amended Wednesday, April 30, 2025 15:29:00, for formatting.

#2024#2025#ADD#Advanced LLMs#ai#AI chatbots#AI systems#Anderson's Angle#Artificial Intelligence#attention#bearing#Behavior#challenge#change#chatbots#chatGPT#circles#claude#coffee#communication#compass#complexity#content#Delay#direction#dynamics#embeddings#English#extension#focus

2 notes
